A Brain-Inspired Chip Can Run AI With Far Less Energy | Quanta Magazine

November 10, 2022

Señor Salme for Quanta Magazine

Contributing Writer

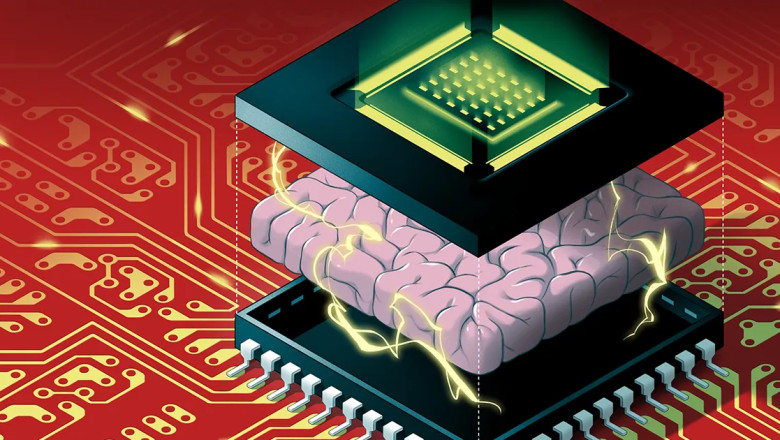

Artificial intelligence algorithms cannot keep growing at their current pace. Algorithms like deep neural networks — which are loosely inspired by the brain, with multiple layers of artificial neurons linked to each other via numerical values called weights — get bigger every year. But these days, hardware improvements are no longer keeping pace with the enormous amount of memory and processing capacity required to run these massive algorithms. Soon, the size of AI algorithms may hit a wall.

And even if we could keep scaling up hardware to meet the demands of AI, there’s another problem: running these algorithms on traditional computers wastes an enormous amount of energy. The high carbon emissions generated from running large AI algorithms are already harmful to the environment, and they will only get worse as the algorithms grow ever more gigantic.

One solution, called neuromorphic computing, takes inspiration from biological brains to create energy-efficient designs. Unfortunately, while these chips can outpace digital computers in conserving energy, they’ve lacked the computational power needed to run a sizable deep neural network. That’s made them easy for AI researchers to overlook.

That finally changed in August, when Weier Wan, H.-S. Philip Wong, Gert Cauwenberghs and their colleagues revealed a new neuromorphic chip called NeuRRAM that includes 3 million memory cells and thousands of neurons built into its hardware to run algorithms. It uses a relatively new type of memory called resistive RAM, or RRAM. Unlike previous RRAM chips, NeuRRAM is programmed to operate in an analog fashion to save more energy and space. While digital memory is binary — storing either a 1 or a 0 — analog memory cells in the NeuRRAM chip can each store multiple values along a fully continuous range. That allows the chip to store more information from massive AI algorithms in the same amount of chip space.

As a result, the new chip can perform as well as digital computers on complex AI tasks like image and speech recognition, and the authors claim it is up to 1,000 times more energy efficient, opening up the possibility for tiny chips to run increasingly complicated algorithms within small devices previously unsuitable for AI like smart watches and phones.

Researchers not involved in the work have been deeply impressed by the results. “This paper is pretty unique,” said Zhongrui Wang, a longtime RRAM researcher at the University of Hong Kong. “It makes contributions at different levels — at the device level, at the circuit architecture level, and at the algorithm level.”

In digital computers, the huge amounts of energy wasted while running AI algorithms are caused by a simple and ubiquitous design flaw that makes every single computation inefficient. Typically, a computer’s memory, which holds the data and numerical values it crunches during computation, is placed on the motherboard away from the processor, where computing takes place.

For the information coursing through the processor, “it’s kind of like you spend eight hours on the commute, but you do two hours of work,” said Wan, a computer scientist formerly at Stanford University who recently moved to the AI startup Aizip.

The NeuRRAM chip can run computations within its memory, where it stores data not in traditional binary digits, but in an analog spectrum.

David Baillot/UCSD

Fixing this problem with new all-in-one chips that put memory and computation in the same place seems straightforward. It’s also closer to how our brains likely process information, since many neuroscientists believe that computation happens within populations of neurons, while memories are formed when the synapses between neurons strengthen or weaken their connections. But creating such devices has proved difficult, since current forms of memory are incompatible with the technology in processors.

Computer scientists decades ago developed the materials to create new chips that perform computations where memory is stored — a technology known as compute-in-memory. But with traditional digital computers performing so well, these ideas were overlooked for decades.

“That work, just like most scientific work, was kind of forgotten,” said Wong, a professor at Stanford.

Indeed, the first such device dates back to at least 1964, when electrical engineers at Stanford discovered they could manipulate certain materials, called metal oxides, to turn their ability to conduct electricity on and off. That’s significant because a material’s ability to switch between two states provides the backbone for traditional memory storage. Typically, in digital memory, a state of high voltage corresponds to a 1, and low voltage to a 0.

To get an RRAM device to switch states, you apply a voltage across metal electrodes hooked up to two ends of the metal oxide. Normally, metal oxides are insulators, which means they don’t conduct electricity. But with enough voltage, the current builds up, eventually pushing through the material’s weak spots and forging a path to the electrode on the other side. Once the current has broken through, it can flow freely along that path.

Wong likens this process to lightning: When enough charge builds up inside a cloud, it quickly finds a low-resistance path and lightning strikes. But unlike with lightning, whose path disappears, the path through the metal oxide remains, meaning it stays conductive indefinitely. And it’s possible to erase the conductive path by applying another voltage to the material. So researchers can switch an RRAM between two states and use them to store digital memory.
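
The two-state switching described above can be pictured with a toy model. This is only an illustrative sketch: the class name `ToyRRAMCell`, the threshold values, and the choice of a negative voltage for erasing are assumptions made for the example, not details reported for any real device.

```python
class ToyRRAMCell:
    """Toy two-state memory cell; thresholds are made-up illustrative values."""

    def __init__(self, set_voltage=1.5, reset_voltage=-1.5):
        self.set_voltage = set_voltage      # assumed voltage that forges the path
        self.reset_voltage = reset_voltage  # assumed voltage that erases the path
        self.conductive = False             # the metal oxide starts as an insulator

    def apply(self, voltage):
        """Apply a voltage across the electrodes and return the resulting state."""
        if voltage >= self.set_voltage:
            self.conductive = True          # conductive path forms
        elif voltage <= self.reset_voltage:
            self.conductive = False         # conductive path is erased
        # Any voltage in between leaves the state untouched: the path persists.
        return self.conductive

    def read_bit(self):
        """Interpret the conductive state as a stored 1, the insulating state as 0."""
        return 1 if self.conductive else 0


cell = ToyRRAMCell()
cell.apply(0.5)            # too weak to break through
bit_before = cell.read_bit()
cell.apply(2.0)            # strong enough to form the path
cell.apply(0.0)            # removing the voltage does not erase it
bit_after_set = cell.read_bit()
cell.apply(-2.0)           # an erasing voltage removes the path
bit_after_reset = cell.read_bit()
```

The key property the sketch captures is persistence: once set, the state survives until an erasing voltage is applied.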

Midcentury researchers didn’t recognize the potential for energy-efficient computing, nor did they need it yet with the smaller algorithms they were working with. It took until the early 2000s, with the discovery of new metal oxides, for researchers to realize the possibilities.

Wong, who was working at IBM at the time, recalls that an award-winning colleague working on RRAM admitted he didn’t fully understand the physics involved. “If he doesn’t understand it,” Wong remembers thinking, “maybe I should not try to understand it.”

But in 2004, researchers at Samsung Electronics announced that they had successfully integrated RRAM memory built on top of a traditional computing chip, suggesting that a compute-in-memory chip might finally be possible. Wong resolved to at least try.

For more than a decade, researchers like Wong worked to build up RRAM technology to the point where it could reliably handle high-powered computing tasks. Around 2015, computer scientists began to recognize the enormous potential of these energy-efficient devices for large AI algorithms, which were beginning to take off. That year, scientists at the University of California, Santa Barbara showed that RRAM devices could do more than just store memory in a new way. They could execute basic computing tasks themselves — including the vast majority of computations that take place within a neural network’s artificial neurons, which are simple matrix multiplication tasks.

In the NeuRRAM chip, silicon neurons are built into the hardware, and the RRAM memory cells store the weights, the values representing the strength of the connections between neurons. And because the NeuRRAM memory cells are analog, the weights that they store represent the full range of resistance states that occur while the device switches between a low-resistance and a high-resistance state. This enables even higher energy efficiency than digital RRAM memory can achieve because the chip can run many matrix computations in parallel, rather than in lockstep one after another, as in the digital processing versions.
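
To see why an array of analog memory cells amounts to a matrix multiplication, consider a simplified model of a crossbar. The sketch below is an idealization, not the NeuRRAM circuit: it assumes each cell obeys Ohm's law (current = conductance × voltage) and that currents sharing a column wire simply add up. The function name and the numbers are hypothetical, and units are arbitrary.

```python
def crossbar_column_currents(conductances, row_voltages):
    """Return the total current collected on each column of an idealized crossbar.

    conductances[i][j] is the conductance of the cell joining row i to column j
    and plays the role of a stored weight.
    """
    n_rows = len(conductances)
    n_cols = len(conductances[0])
    if len(row_voltages) != n_rows:
        raise ValueError("need exactly one voltage per row")
    currents = [0.0] * n_cols
    for i in range(n_rows):
        for j in range(n_cols):
            # Ohm's law for one cell; summing over i models currents
            # merging on the shared column wire.
            currents[j] += conductances[i][j] * row_voltages[i]
    return currents


# Hypothetical array with 3 input rows and 2 output columns.
G = [
    [1.0, 2.0],
    [0.5, 0.0],
    [2.0, 1.0],
]
V = [1.0, 2.0, 0.5]

I = crossbar_column_currents(G, V)
print(I)  # [3.0, 2.5]
```

In software the two loops run one multiplication at a time. In the idealized physical array every cell conducts simultaneously, which is the sense in which the computation happens in parallel and in the memory itself.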

But since analog processing is still decades behind digital processing, there are still many issues to iron out. One is that analog RRAM chips must be unusually precise since imperfections on the physical chip can introduce variability and noise. (For traditional chips, with only two states, these imperfections don’t matter nearly as much.) That makes it significantly harder for analog RRAM devices to run AI algorithms, given that the accuracy of, say, recognizing an image will suffer if the conductive state of the RRAM device isn’t exactly the same every time.

“When we look at a lightning path, every time it’s different,” said Wong. “So as a result of that, the RRAM exhibits a certain degree of stochasticity: every time you program them is slightly different.” Wong and his colleagues proved that RRAM devices can store continuous AI weights and still be as accurate as digital computers if the algorithms are trained to get used to the noise they encounter on the chip, an advance that enabled them to produce the NeuRRAM chip.
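
One common way to get a model used to noisy weights is to inject random perturbations into the weights during training. The article does not spell out the team's exact procedure, so the following is only a minimal sketch of that general idea on a toy linear model. The noise level `sigma`, the data, and all function names are assumptions chosen for illustration.

```python
import random


def noisy(weights, sigma, rng):
    """Return a perturbed copy of the weights, standing in for the slight
    differences that arise each time a cell is programmed."""
    return [w + rng.gauss(0.0, sigma) for w in weights]


def predict(weights, x):
    """Weighted sum of the inputs (a single artificial neuron, no activation)."""
    return sum(w * xi for w, xi in zip(weights, x))


def train(data, sigma, epochs=200, lr=0.05, seed=0):
    """Fit a linear model with stochastic gradient descent, running every
    forward pass through freshly perturbed weights."""
    rng = random.Random(seed)
    weights = [0.0] * len(data[0][0])
    for _ in range(epochs):
        for x, target in data:
            error = predict(noisy(weights, sigma, rng), x) - target
            # Squared-error gradient evaluated at the noisy weights,
            # applied to the clean stored weights.
            weights = [w - lr * error * xi for w, xi in zip(weights, x)]
    return weights


# Toy data generated from target = 2*x0 - 1*x1 (hypothetical example).
data = [
    ([1.0, 0.0], 2.0),
    ([0.0, 1.0], -1.0),
    ([1.0, 1.0], 1.0),
    ([2.0, 1.0], 3.0),
]
weights = train(data, sigma=0.05)
print(weights)
```

On this toy problem the learned weights settle close to the underlying values despite the perturbations. In a deep network the same trick pushes training toward solutions whose accuracy degrades less when the stored weights are slightly off.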

H.-S. Philip Wong (left), Weier Wan and Gert Cauwenberghs (not pictured) helped build a new kind of computer chip that can run huge AI algorithms with unprecedented efficiency.

Courtesy of Philip Wong (left); Paul Jin

Another major issue they had to solve involved the flexibility needed to support diverse neural networks. In the past, chip designers had to line up the tiny RRAM devices in one area next to larger silicon neurons. The RRAM devices and the neurons were hard-wired without programmability, so the computation could only be performed in a single direction. To support neural networks with bidirectional computation, extra wires and circuits were necessary, inflating energy and space needs.

So Wong’s team designed a new chip architecture where the RRAM memory devices and silicon neurons were mixed together. This small change to the design reduced the total area and saved energy.

“I thought [the arrangement] was really beautiful,” said Melika Payvand, a neuromorphic researcher at the Swiss Federal Institute of Technology Zurich. “I definitely consider it a groundbreaking work.”

For several years, Wong’s team worked with collaborators to design, manufacture, test, calibrate and run AI algorithms on the NeuRRAM chip. They did consider using other emerging types of memory that can also be used in a compute-in-memory chip, but RRAM had an edge because of its advantages in analog programming, and because it was relatively easy to integrate with traditional computing materials.

Their recent results represent the first RRAM chip that can run such large and complex AI algorithms — a feat that has previously only been possible in theoretical simulations. “When it comes to real silicon, that capability was missing,” said Anup Das, a computer scientist at Drexel University. “This work is the first demonstration.”

“Digital AI systems are flexible and precise, but orders of magnitude less efficient,” said Cauwenberghs. Now, Cauwenberghs said, their flexible, precise and energy-efficient analog RRAM chip has “bridged the gap for the first time.”

The team’s design keeps the NeuRRAM chip tiny, just the size of a fingernail, while squeezing in 3 million RRAM memory devices that can serve as analog processors. And while it can run neural networks at least as well as digital computers do, the chip also (and for the first time) can run algorithms that perform computations in different directions. Their chip can input a voltage to the rows of the RRAM array and read outputs from the columns as is standard for RRAM chips, but it can also do it backward from the columns to the rows, so it can be used in neural networks that operate with data flowing in different directions.
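
In matrix terms, driving the rows and reading the columns multiplies the input by the stored weights, while driving the columns and reading the rows multiplies by the transpose of the same weights. The sketch below illustrates that relationship for an idealized array in which cell currents simply add along a wire. The function names and values are hypothetical, and nothing here models the actual NeuRRAM circuitry.

```python
def rows_to_columns(G, row_voltages):
    """Drive the rows, read the columns: output j sums G[i][j] * v[i] over rows i."""
    return [
        sum(G[i][j] * row_voltages[i] for i in range(len(G)))
        for j in range(len(G[0]))
    ]


def columns_to_rows(G, column_voltages):
    """Drive the columns, read the rows: output i sums G[i][j] * u[j] over columns j."""
    return [
        sum(G[i][j] * column_voltages[j] for j in range(len(G[0])))
        for i in range(len(G))
    ]


# The same stored weights serve both directions (hypothetical 3x2 array).
G = [
    [1.0, 2.0],
    [0.5, 0.0],
    [2.0, 1.0],
]

forward = rows_to_columns(G, [1.0, 2.0, 0.5])
backward = columns_to_rows(G, [1.0, 2.0])
print(forward)   # [3.0, 2.5]
print(backward)  # [5.0, 0.5, 4.0]
```

Because both directions reuse one set of stored weights, no second copy of the matrix is needed for the reverse pass.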

As with RRAM technology itself, this has long been possible, but no one thought to do it. “Why didn’t we think about this before?” Payvand asked. “In hindsight, I don’t know.”

“This actually opens up a lot of other opportunities,” said Das. As examples, he mentioned the ability of a simple system to run the enormous algorithms needed for multidimensional physics simulations or self-driving cars.

Yet size is an issue. The largest neural networks now contain billions of weights, not the millions contained in the new chips. Wong plans to scale up by stacking multiple NeuRRAM chips on top of each other.

It will be just as important to keep the energy costs low in future devices, or to scale them down even further. One way to get there is by copying the brain even more closely to adopt the communication signal used between real neurons: the electrical spike. It’s a signal fired off from one neuron to another when the difference in the voltage between the inside and outside of the cell reaches a critical threshold.

“There are big challenges there,” said Tony Kenyon, a nanotechnology researcher at University College London. “But we still might want to move in that direction, because … chances are that you will have greater energy efficiency if you’re using very sparse spikes.” To run algorithms that spike on the current NeuRRAM chip would likely require a totally different architecture, though, Kenyon noted.

For now, the energy efficiency the team accomplished while running large AI algorithms on the NeuRRAM chip has created new hope that memory technologies may represent the future of computing with AI. Maybe one day we’ll even be able to match the human brain’s 86 billion neurons and the trillions of synapses that connect them without running out of power.
