A sea otter in the style of Girl with a Pearl Earring by Johannes Vermeer, created with Dall-E.

Image generators such as Dall-E 2 can produce pictures on any theme you wish for in seconds. Some creatives are alarmed, but others are sceptical of the hype.

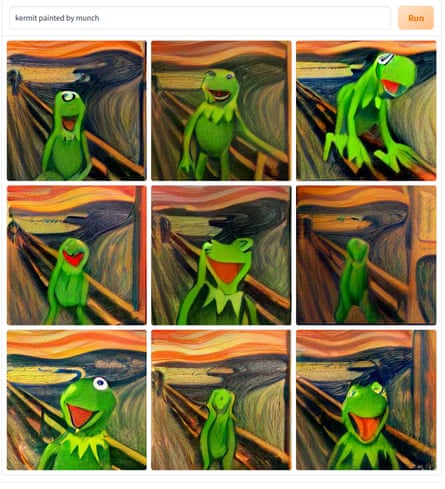

When the concept artist and illustrator RJ Palmer first witnessed the fine-tuned photorealism of compositions produced by the AI image generator Dall-E 2, his feeling was one of unease. The tool, released by the AI research company OpenAI, showed a marked improvement on 2021’s Dall-E, and was quickly followed by rivals such as Stable Diffusion and Midjourney. Type in any surreal prompt, from Kermit the frog in the style of Edvard Munch, to Gollum from The Lord of the Rings feasting on a slice of watermelon, and these tools will return a startlingly accurate depiction moments later.

The internet revelled in the meme-making opportunities, with a Twitter account documenting “weird Dall-E generations” racking up more than a million followers. Cosmopolitan trumpeted the world’s first AI-generated magazine cover, and technology investors fell over themselves to wave in the new era of “generative AI”. The image-generation capabilities have already spread to video, with the release of Google’s Imagen Video and Meta’s Make-A-Video.

But AI’s new artistic prowess wasn’t received so ecstatically by some creatives. “The main concern for me is what this does to the future of not just my industry, but creative human industries in general,” says Palmer.

In June, Cosmopolitan published the first AI-generated magazine cover, a collaboration between digital artist Karen X Cheng and OpenAI.

By ingesting large datasets in order to analyse patterns and build predictive models, AI has long proved itself superior to humans at some tasks. It’s this number-crunching nous that led an AI to trounce the world Go champion back in 2016, rapidly computing the most advantageous game strategy, and unafraid to execute moves that would have elicited scoffs had they come from a person. But until recently, producing original output, especially creative work, was considered a distinctly human pursuit.

Recent improvements in AI have shifted the dial. Not only can AI image generators now transpose written phrases into novel pictures, but strides have been made in AI text generation too: large language models such as GPT-3 have reached a level of fluency that convinced at least one recently fired Google researcher of machine sentience. Plug in Bach’s oeuvre, and an AI can improvise music in more or less the same style – with the caveat that the result would often be impossible for a human orchestra to actually play.

This class of technology is known as generative AI, and the latest image generators work through a process known as diffusion. Essentially, huge datasets are scraped together to train the AI, which learns to turn random visual noise, step by step, into new content that resembles the training data but isn’t identical. Once it has seen millions of pictures of dogs tagged with the word “dog”, it is able to lay down pixels in the shape of an entirely novel pup that resembles the dataset closely enough that we would have no issue labelling it a dog. It’s not perfect – AI image tools still struggle with rendering hands that look human, body proportions can be off, and they have a habit of producing nonsense writing.

While internet users have embraced this supercharged creative potential – armed with the correctly refined prompt, even novices can now create arresting digital canvases – some artists have balked at the new technology’s capacity for mimicry. Among the prompts entered into image generators Stable Diffusion and Midjourney, many tag an artist’s name in order to ensure a more aesthetically pleasing style for the resulting image. Something as mundane as a bowl of oranges can become eye-catching if rendered in the style of, say, Picasso. Because the AI has been trained on billions of images, some of which are copyrighted works by living artists, it can generally create a pretty faithful approximation.

‘Kermit the frog painted by Munch’, created by Floris Groesz with Dall-E software. Photograph: @SirJanosFroglez

Some are outraged at what they consider theft of their artistic trademark. Greg Rutkowski, a concept artist and illustrator well known for his golden-light infused epic fantasy scenes, has already been mentioned in hundreds of thousands of prompts used across Midjourney and Stable Diffusion. “It’s been just a month. What about in a year? I probably won’t be able to find my work out there because [the internet] will be flooded with AI art,” Rutkowski told MIT Technology Review. “That’s concerning.”

Dall-E 2 is a black box, with OpenAI refusing to release the code or share the data that the tool was trained on. But Stable Diffusion has chosen to open-source its code and share details of the database of images used to train its model.

Spawning, an artist collective, has built a tool called Have I Been Trained? to help artists discover if their artworks were among the 5.8bn images used to train Stable Diffusion, and to opt in or out of appearing in future training sets. The company behind Stable Diffusion, Stability AI, has said it is open to working with the tool. Of the 1,800 artists who have already signed up to use the tool, Matthew Dryhurst, an academic and member of Spawning, says it’s a 60/40 split in favour of opt-out.

But the Concept Art Association (CAA) stresses that the damage has already been done this time around, because the tools have already been trained on artists’ work without their consent. “It’s like someone who already robbed you saying, ‘Do you want to opt out of me robbing you?’” says Karla Ortiz, an illustrator and board member of the CAA.

Stability AI’s Emad Mostaque says that although the data used to train Stable Diffusion didn’t offer an opt-out option, it “was very much a test model, heavily unoptimised on a snapshot of images on the internet.” He says new models are typically trained on fresh datasets and this is when the company would take artists’ requests into consideration.

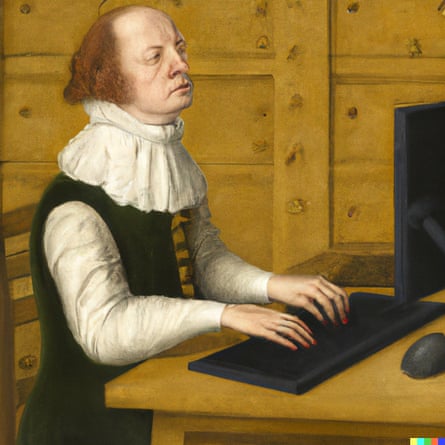

A ‘renaissance painting of a person sitting an office cubicle, typing on a keyboard, stressed’, created by Dall-E.

It’s not just artworks: analysis of the training database for Stable Diffusion has revealed it also sucked up private medical photography, photos of members of the public (sometimes alongside their full names), and pornography.

Ortiz particularly objects to Stability AI commercialising part of its operation – DreamStudio, which offers customers custom models and enhanced ease of use. “These companies have now set a precedent that you use everyone’s copyrighted and private data without anyone even opting in,” she says. “Then they say: ‘We can’t do anything about it, the genie’s out of the bottle!’”

What can be done about this beyond relying on the beneficence of the companies behind these tools is still in question.

The CAA cites worrying UK legislation that might allow AI companies even greater freedom to suck up copyrighted creative works to train tools that can then be deployed commercially. In the US, the organisation has met government officials to speak about copyright law, and is currently in talks with Washington lobbyists to discuss how to push back on this as an industry.

Beyond copycatting, there’s the even bigger issue pinpointed by Palmer: do these tools put an entire class of creatives at risk? In some cases, AI may be used in place of stock images – the image library Shutterstock recently made a deal with OpenAI to integrate Dall-E into its product. But Palmer argues that artwork such as illustration for articles, books or album covers may soon face competition from AI, undermining a thriving area of commercial art.

The owners of AI image generators tend to argue that, on the contrary, these tools democratise art. “So much of the world is creatively constipated,” the founder of Stability AI, Emad Mostaque, said at a recent event to celebrate a $101m fundraising round, “and we’re going to make it so that they can poop rainbows.” But if everyone can harness AI to create technically masterful images, what does it say about the essence of creativity?

Anna Ridler, an artist known for her work with AI, says that despite Dall-E 2 feeling “like magic” the first time you use it, so far she hasn’t felt a spark of inspiration in her experiments with the tool. She prefers working with another kind of AI called generative adversarial networks (GANs). GANs work as an exchange between two networks, one creating new imagery, and the other deciding how well the image meets a specified goal. An artistic GAN might have the goal of creating something that is as different as possible from its training data without leaving the category of what humans would consider visual art.

These issues have intensified debate around the extent to which we can credit AI with creativity. According to Marcus du Sautoy, an Oxford University mathematician and author of The Creativity Code: How AI is Learning to Write, Paint and Think, Dall-E and other image generators probably come closest to replicating a kind of “combinational” creativity, because the algorithms are taught to create novel images in the same style as millions of others in the training data. GANs of the kind Ridler works with are closer to “transformational” creativity, he says – creating something in an entirely novel style.

A Dall-E generated image of “a vintage photo of a corgi on a beach” – showing that the software can also create realistic looking images.

Ridler objects to such a formulaic approach to defining creativity. “It flattens it down into thinking of art as interesting wallpaper, rather than something that is trying to express ideas and search for truth,” she says. As a conceptual artist, she is well aware of AI’s shortcomings. “AI can’t handle concepts: collapsing moments in time, memory, thoughts, emotions – all of that is a real human skill, that makes a piece of art rather than something that visually looks pretty.”

AI image tools demonstrate some of these deficiencies. While “astronaut riding a horse” will return an accurate rendering, “horse riding an astronaut” will return images that look much the same – indicating that AI doesn’t really grasp the causal relationships between different actors in the world.

Dryhurst and Ridler contend the “artist replacement” idea stems from underestimating the artistic process. Dryhurst laments what he sees as the media whipping up alarmist narratives, highlighting a recent New York Times article about an artist who used Midjourney to win the digital category of the Colorado state fair’s annual art competition. Dryhurst points out that a state fair is not exactly a prestigious forum. “They were giving out prizes for canned fruit,” he says. “What annoys me is that there seems to be this kind of thirst to scare artists.”

“Art is dead, dude,” said the state fair winner.

It is possible that the hype around these tools as disruptive forces outstrips reality. Mostaque says AI image generators are part of what he calls “intelligent media”, which represents a “one trillion dollar” opportunity, citing Disney’s content budget of more than $10bn (£8.7bn), and the entire games industry’s value of more than $170bn. “Every single piece of content from the BBC to Disney will be made interactive by these models,” he says.
